Introduction

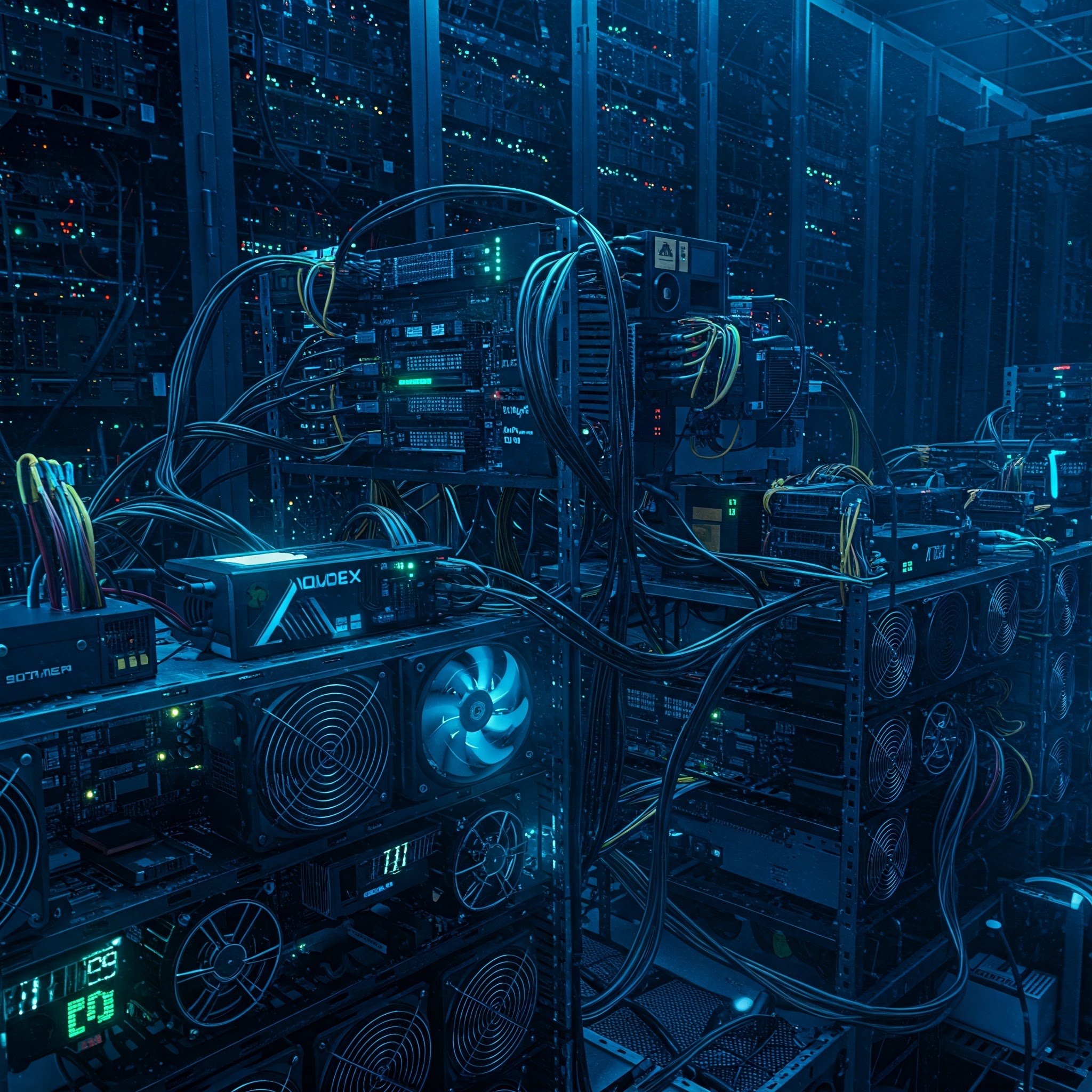

The rapid adoption of AI systems has brought unprecedented capabilities to organizations worldwide. However, with these advances come new security challenges that are often overlooked. Our recent research reveals a concerning vulnerability in GPU-accelerated AI systems that could allow attackers to execute malicious code and hijack valuable computational resources.

Novel Attack Vector: GPU-Based Cryptomining

While deserialization vulnerabilities and custom layer exploits are well-known attack vectors in AI systems, our research demonstrates a novel application of these vulnerabilities: using them to deploy cryptocurrency miners that specifically target GPU resources. This attack leverages two common entry points in AI systems:

- Deserialization Process: When AI models are saved and loaded, they go through serialization and deserialization. Attackers can exploit this process to inject malicious code that hijacks GPU resources.

- Custom Layer Features: Custom computation layers in AI frameworks, such as the Keras Lambda layers used with TensorFlow, embed arbitrary code in the model itself. Attackers can manipulate them to run unauthorized code that uses GPU resources for cryptocurrency mining.
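The deserialization entry point can be illustrated with a deliberately harmless sketch. It assumes a model saved with Python's standard `pickle` module; the class name `TamperedModel` is hypothetical and this is not the proof of concept from our paper. The point is only that loading the bytes calls a function chosen by whoever wrote the file.

```python
import os
import pickle


class TamperedModel:
    """Hypothetical stand-in for a model object that an attacker has modified."""

    def __reduce__(self):
        # pickle records this (callable, args) pair and calls it at load time.
        # os.getcwd is harmless; an attacker could name any importable callable.
        return (os.getcwd, ())


# What the attacker ships as a "model file".
blob = pickle.dumps(TamperedModel())

# What the victim does: loading runs os.getcwd() instead of rebuilding a model.
loaded = pickle.loads(blob)
print(type(loaded).__name__, loaded)
```

The loaded object is the return value of the call, not a `TamperedModel` instance, which is why loading an untrusted model file is equivalent to running untrusted code.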

Why This Matters

The implications of these vulnerabilities are significant for several reasons:

- Resource Theft: Attackers can hijack expensive GPU resources for cryptocurrency mining or other unauthorized purposes.

- Detection Challenges: The parallel nature of GPU processing makes it extremely difficult to detect malicious activity.

- Financial Impact: GPU resources are costly, and unauthorized usage can lead to significant financial losses.

- Operational Disruption: Compromised GPU resources can affect the performance of legitimate AI workloads.

Real-World Impact

To demonstrate the severity of these vulnerabilities, we developed a proof-of-concept attack that deploys a cryptocurrency miner through a compromised AI model. The miner's GPU usage blends in with normal AI workloads, which makes the attack particularly difficult to detect with conventional monitoring tools.

Protecting Your AI Systems

To safeguard your AI infrastructure against these threats, we recommend:

- Implement Strong Validation: Always validate serialized models before loading them into your system.

- Restrict Custom Computations: Limit the use of custom layers in production environments.

- Use Secure Formats: Prefer serialization formats such as “safetensors”, which store only tensor data and do not execute code when a model is loaded.

- Regular Audits: Conduct thorough code reviews and security audits of AI models before deployment.

- GPU Monitoring: Implement specialized monitoring tools for GPU workloads.
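As one possible form of the validation step, the sketch below inspects a pickle stream for opcodes that import or call objects, without ever loading it. It assumes the model is a pickle file and uses only the standard `pickletools` module; the function names and the opcode set are our own illustrative choices, not a complete scanner.

```python
import os
import pickle
import pickletools

# Opcodes that import objects or call them while a pickle is being loaded.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def find_suspicious_opcodes(data: bytes) -> list:
    """Return the import/call opcode names present in a pickle stream.

    pickletools.genops only parses the bytes; it does not execute them.
    """
    return [
        opcode.name
        for opcode, _arg, _pos in pickletools.genops(data)
        if opcode.name in SUSPICIOUS_OPCODES
    ]


def is_plain_data(data: bytes) -> bool:
    """True when the stream holds only basic containers and values."""
    return not find_suspicious_opcodes(data)


class _Tampered:
    """Hypothetical object whose pickle calls a function on load."""

    def __reduce__(self):
        return (os.getcwd, ())


clean = pickle.dumps({"weights": [0.1, 0.2, 0.3]})
tampered = pickle.dumps(_Tampered())

print("clean:", find_suspicious_opcodes(clean))
print("tampered:", find_suspicious_opcodes(tampered))
```

Real checkpoints often contain these opcodes for legitimate reasons, such as rebuilding framework objects, so a practical scanner would more likely compare the imported names against an allowlist than reject every match.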

Looking Ahead

As AI systems become more prevalent, securing GPU resources will become increasingly critical. Organizations must adapt their security strategies to address these new challenges, particularly in environments where AI models are deployed at scale.

Additional Information

The full technical details of our research, including detailed vulnerability analysis and mitigation strategies, are available in our comprehensive paper “Crypto Miner Attack: GPU Remote Code Execution Attacks.”

—

Read the full research here: https://arxiv.org/abs/2502.10439

For more information about our security research and services, visit nextsec.ai

© 2025 NextSec.ai. All rights reserved.